We live in an age of precision. Our digital world is built on perfect ones and zeroes, flawless calculations, and unwavering logic. We expect our computers to be unerringly accurate, executing billions of instructions without a single misstep. And for good reason – this quest for perfection has powered everything from financial algorithms to space missions.

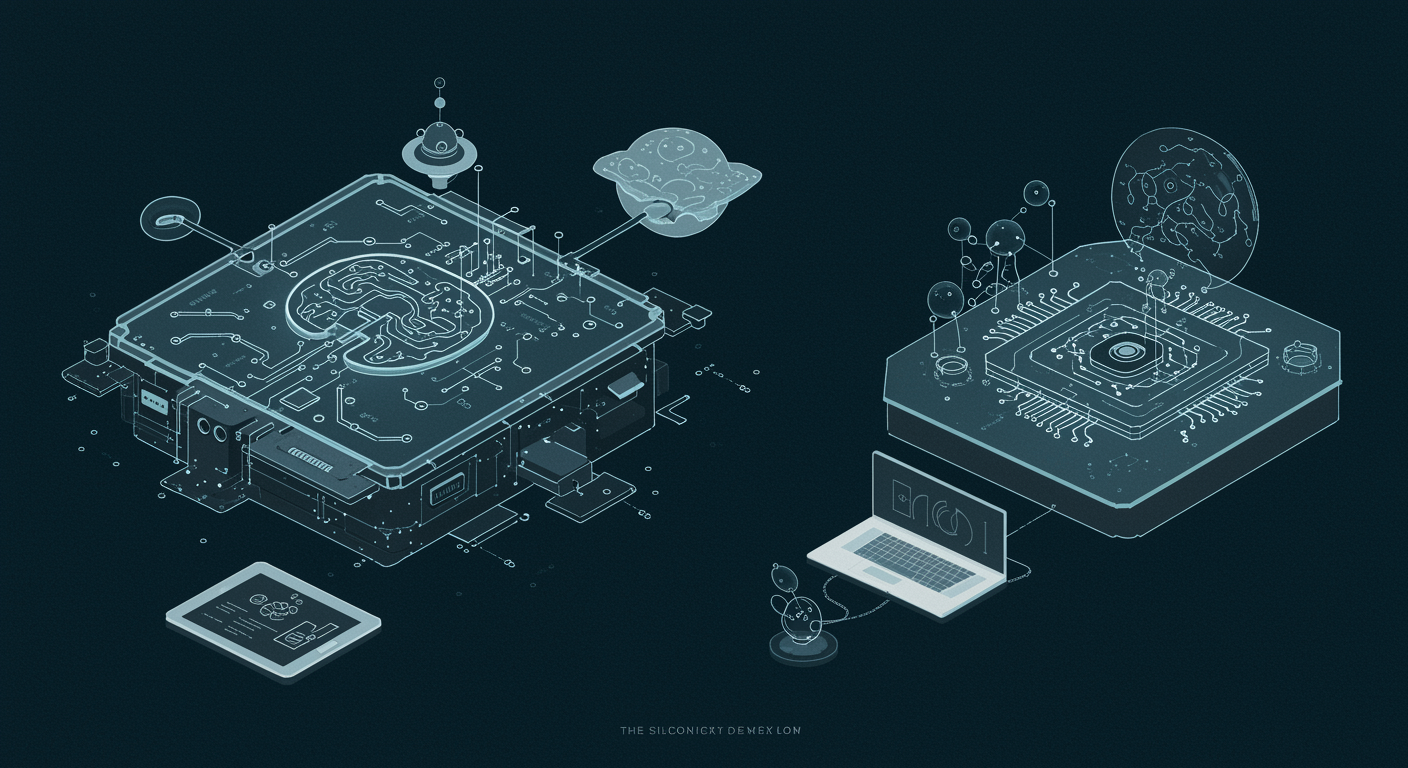

But what if this very pursuit of flawless digital machinery is holding us back from humanity’s next great leap in computing? What if the key to unlocking truly intelligent, energy-efficient AI doesn’t lie in more perfect silicon, but in embracing imperfection? Welcome to the “Silicon Paradox,” a fascinating twist in the narrative of technology that suggests our future computers might need to be a little…messier.

The Tyranny of Perfection: How Our Computers Work (and Why It’s Limiting)

Think about your computer right now. At its heart lies the famous von Neumann architecture. Data is stored in one place (memory), and the processor fetches it, crunches the numbers, and sends the results back. This “data shuffling” between memory and processor, while incredibly precise, creates a bottleneck. It’s like a chef (processor) constantly running back and forth to the pantry (memory) for every single ingredient, even if the recipe is simple. For complex, real-time tasks, especially in modern AI where massive datasets are involved, this constant data movement consumes enormous amounts of energy and time.

Furthermore, our digital computers are clock-driven. Every operation is synchronized to an internal clock, ticking billions of times a second. This ensures perfect order, but it also means that a part of the chip with nothing to do can still draw power while it waits for the next clock cycle. This relentless, synchronized execution is excellent for spreadsheets and databases, but it becomes a massive energy drain when trying to emulate the organic, chaotic brilliance of a brain.

Consider this surprising fact: A human brain operates on roughly 20 watts of power – less than a dim lightbulb – yet it can perform feats of perception, learning, and reasoning that even the most powerful supercomputers struggle to match while drawing megawatts. At a single megawatt, that is 50,000 times the brain’s power budget. This stark difference in energy efficiency is the core of the Silicon Paradox. Our brains are not digital; they are largely analog. They don’t run on clock cycles, and they are, in many ways, gloriously imperfect.

Embracing the “Mess”: The Brain as Our Blueprint

So, what does an “imperfect” computer look like? For inspiration, we need only look inward. Our brains are masterpieces of biological innovation. They consist of roughly 86 billion neurons, each a tiny processor, connected by trillions of synapses. These connections aren’t fixed; they strengthen or weaken based on experience, a phenomenon called plasticity. This is how we learn.
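To make plasticity a little more concrete, here is a minimal sketch of a Hebbian-style rule ("cells that fire together wire together"). It is a toy model, not a description of real synapses: the function name `hebbian_update`, the learning rate, and the decay term are all illustrative assumptions.

```python
def hebbian_update(weight, pre, post, learning_rate=0.1, decay=0.01):
    """Return the new connection strength after one time step.

    pre and post are activity levels (0.0 = silent, 1.0 = active).
    The weight grows when both sides are active together and
    otherwise drifts slowly back toward zero.
    All parameter values here are made up for illustration.
    """
    return weight + learning_rate * pre * post - decay * weight


weight = 0.5
for _ in range(20):            # 20 steps of correlated activity ("practice")
    weight = hebbian_update(weight, pre=1.0, post=1.0)
strengthened = weight

for _ in range(20):            # 20 steps with a silent input ("disuse")
    weight = hebbian_update(weight, pre=0.0, post=1.0)
weakened = weight

print(f"after practice: {strengthened:.3f}, after disuse: {weakened:.3f}")
```

Nothing is overwritten in one go here. The connection drifts up with use and back down with neglect, which is the gradual adjustment the brain analogy relies on.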

Here’s the kicker: neurons don’t operate with perfect digital precision. They “fire” when enough electrochemical signals accumulate. The spike itself is close to all-or-nothing, but the build-up that triggers it is an analog process, influenced by noise and subtle variations. Learning isn’t about perfectly rewriting memory bits; it’s about gradually adjusting the strength of these noisy, analog synaptic connections. The brain is also incredibly parallel – countless operations happen simultaneously, without a central clock orchestrating every move. And crucially, it’s fault-tolerant; a few dead neurons won’t crash the whole system. Our brains are inherently robust to error, which is a feature, not a bug.
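The “fire when enough signal accumulates” behavior is often modeled with a leaky integrate-and-fire neuron. The sketch below is one such toy model, under stated assumptions: the threshold, leak factor, and noise level are arbitrary illustrative values, and `simulate_neuron` is a hypothetical helper, not part of any library.

```python
import random


def simulate_neuron(inputs, threshold=1.0, leak=0.9, noise=0.05, seed=0):
    """Return the time steps at which a leaky integrate-and-fire neuron spikes."""
    rng = random.Random(seed)      # fixed seed so the run is repeatable
    potential = 0.0
    spike_times = []
    for t, current in enumerate(inputs):
        # Leak a little charge, then add the input plus a small random jitter.
        potential = leak * potential + current + rng.gauss(0.0, noise)
        if potential >= threshold:
            spike_times.append(t)
            potential = 0.0        # reset after firing
    return spike_times


weak_input = [0.05] * 50     # settles around 0.5, well below the threshold
strong_input = [0.3] * 50    # builds up to the threshold every few steps

print("weak input spikes:  ", simulate_neuron(weak_input))
print("strong input spikes:", simulate_neuron(strong_input))
```

The noise term shifts the exact spike times from run to run (try a different `seed`), yet the overall pattern survives: strong input produces a steady stream of spikes and weak input produces few or none.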

This brings us to neuromorphic computing – the attempt to build computer hardware that mimics the brain’s structure and function. Instead of separating memory and processing, neuromorphic chips integrate them, much like how neurons and synapses are intertwined. They use “spiking” neurons that only activate when needed (event-driven), which can save a great deal of power.
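A rough way to see why event-driven operation can help is to count how many updates each scheme performs. This sketch assumes a hypothetical network in which about 2% of neurons spike on any given tick; that activity level, the network size, and the function name `count_updates` are illustrative choices, and the update count is only a crude stand-in for energy use.

```python
import random


def count_updates(num_neurons=1000, num_ticks=100, activity=0.02, seed=1):
    """Compare the work done by clock-driven and event-driven schemes."""
    rng = random.Random(seed)
    clock_driven = 0
    event_driven = 0
    for _ in range(num_ticks):
        # Clock-driven: every neuron is touched on every tick.
        clock_driven += num_neurons
        # Event-driven: only neurons that actually spike trigger work.
        spikes = sum(1 for _ in range(num_neurons) if rng.random() < activity)
        event_driven += spikes
    return clock_driven, event_driven


clocked, events = count_updates()
print(f"clock-driven updates: {clocked}")
print(f"event-driven updates: {events}")
print(f"ratio: {clocked / events:.1f}x")
```

The sparser the activity, the larger the gap. Real savings depend heavily on the hardware, so treat the ratio as an intuition pump rather than a benchmark.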

From Silicon Perfection to Silicon Approximation: Real-World Examples

This isn’t just theoretical fancy; it’s a rapidly advancing field of digital innovation.

- IBM TrueNorth: One of the best-known early neuromorphic chips, TrueNorth has 4,096 “neurosynaptic cores” that together implement about one million neurons and 256 million synapses. The cores communicate via event-driven spikes rather than marching in lockstep to a fast global clock. The chip is incredibly energy-efficient for specific tasks like pattern recognition. Imagine a future where your phone processes complex visual data using minuscule power, learning as it goes, thanks to a chip inspired by this concept.

- Intel Loihi: Intel’s neuromorphic research chip also employs spiking neural networks. What makes Loihi particularly exciting is its ability to learn on the chip itself. It can adapt and train in real-time, performing tasks like gesture recognition or autonomous robot control with significantly lower energy consumption than traditional processors. This hints at a future where devices learn continuously from their environment, becoming more intelligent over time without needing a constant cloud connection.

- Memristors: These fascinating electronic components are often called the “fourth fundamental circuit element” after resistors, capacitors, and inductors. Why are they so important? Because their resistance depends on how much electrical charge has passed through them, so a single component can act as both memory and a processing unit. This makes them ideal candidates for mimicking synapses, enabling analog, brain-like computation directly in the memory cells. Imagine a computer where calculations happen inside the storage unit, eliminating the von Neumann bottleneck. This is a monumental shift for computer architecture.
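To see how “calculations happen inside the storage unit,” picture memristors arranged in a grid (a crossbar), with each device’s conductance acting as a stored weight. Apply voltages to the rows, and Ohm’s law plus Kirchhoff’s current law produce a weighted sum on each column. The sketch below simulates an idealized version in software; the function name `crossbar_output` and all the numbers are hypothetical, and it ignores wire resistance, device variability, and nonlinearity.

```python
def crossbar_output(conductances, voltages):
    """Column currents of an idealized memristor crossbar.

    conductances[i][j] is the conductance (in siemens) of the device
    joining input row i to output column j; voltages[i] is the voltage
    applied to row i. Ohm's law gives each device current (G * V), and
    Kirchhoff's current law sums those currents along each column,
    which amounts to a matrix-vector product.
    """
    num_cols = len(conductances[0])
    return [
        sum(conductances[i][j] * voltages[i] for i in range(len(voltages)))
        for j in range(num_cols)
    ]


# Hypothetical 3-input, 2-output array; values chosen only for illustration.
G = [
    [1.0e-3, 2.0e-3],
    [0.5e-3, 0.0],
    [2.0e-3, 1.0e-3],
]
V = [0.2, 0.4, 0.1]

currents = crossbar_output(G, V)
print([f"{current * 1e3:.2f} mA" for current in currents])
```

In a physical crossbar there is no loop at all: the sums appear as currents the moment the voltages are applied, and the weights never leave the place where they are stored.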

These “brain-inspired” systems don’t aim for perfect mathematical precision. They operate with approximation, tolerate noise, and often give “good enough” answers rather than numerically exact ones. But for the kind of tasks humans excel at – like recognizing a face in a crowd, understanding spoken language, or navigating a chaotic environment – “good enough” is precisely what’s needed, and it’s vastly more energy-efficient. The subtle variations and inherent noise in these analog systems can even be beneficial, helping the networks explore different solutions and learn more effectively, much like how random fluctuations are thought to play a role in brain plasticity.
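Here is a small, hedged illustration of “good enough” computing. A toy classifier tells two 3×3 pixel patterns apart even though one input pixel is corrupted and every stored weight is perturbed by random noise, mimicking imprecise analog devices. The patterns, the noise levels, and the helper names `classify` and `accuracy` are all invented for this example.

```python
import random

# Two made-up 3x3 patterns, flattened row by row.
PROTOTYPES = {
    "cross": [0, 1, 0, 1, 1, 1, 0, 1, 0],
    "square": [1, 1, 1, 1, 0, 1, 1, 1, 1],
}


def classify(pixels, weight_noise, rng):
    """Pick the prototype with the highest noisy similarity score."""
    best_label, best_score = None, float("-inf")
    for label, template in PROTOTYPES.items():
        score = 0.0
        for p, t in zip(pixels, template):
            # Ideal weight is +1 or -1; add noise to mimic an analog device.
            weight = (1.0 if t else -1.0) + rng.gauss(0.0, weight_noise)
            score += weight * (1.0 if p else -1.0)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


def accuracy(weight_noise, trials=500, seed=42):
    """Fraction of corrupted patterns that are still classified correctly."""
    rng = random.Random(seed)
    correct = 0
    for _ in range(trials):
        label = rng.choice(list(PROTOTYPES))
        pixels = list(PROTOTYPES[label])
        flip = rng.randrange(len(pixels))   # corrupt one input pixel
        pixels[flip] = 1 - pixels[flip]
        correct += classify(pixels, weight_noise, rng) == label
    return correct / trials


for noise in (0.0, 0.2, 0.5):
    print(f"weight noise {noise}: accuracy {accuracy(noise):.2f}")
```

No individual weight is exact, but the decision depends on the sum of many of them, so moderate errors tend to wash out. That is the sense in which these systems trade numerical exactness for robustness.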

The Broader Implications: A Smarter, Greener, More Adaptive Future

The implications of this shift are profound for technology.

Firstly, energy efficiency is paramount. As AI models grow ever larger and more power-hungry, neuromorphic computing offers a pathway to sustainable digital intelligence. Imagine data centers consuming a fraction of the electricity they do today, or smart devices that can perform complex AI tasks for days or weeks on a single charge. This could dramatically reduce the carbon footprint of our increasingly intelligent world.

Secondly, this approach opens doors to entirely new capabilities. Brain-inspired computers are well suited to continuous, unsupervised learning, pattern recognition, and robust decision-making in unpredictable environments. This could lead to genuinely autonomous robots that learn from experience in the real world, advanced medical diagnostics that can adapt to individual patient data, or even materials science innovation driven by AI that can intuit novel structures.

Finally, it forces us to rethink the very foundation of computer science. We are moving from a purely deterministic, clock-driven paradigm to one that embraces probabilistic reasoning, event-driven processing, and inherent fault tolerance. This isn’t just about building faster chips; it’s about building fundamentally different kinds of intelligence, ones that might finally bridge the gap between silicon and biology.

The Next Leap: Embracing the Brain’s Beautiful Imperfections

The Silicon Paradox teaches us that sometimes, the most advanced solutions aren’t found in striving for absolute perfection, but in understanding and even embracing the elegant imperfections of nature. Our journey with technology has always been about pushing boundaries, and now, we’re looking to the most complex and efficient computer we know – the human brain – for our next great leap.

The future of computing might not be about how perfectly we can calculate numbers, but how robustly and efficiently we can interpret the messy, analog world around us. By building computers that learn, adapt, and even make mistakes with grace, we might just unlock a level of intelligence that redefines what it means to be digital, paving the way for truly transformative AI and innovation for humanity. What do you think this new era of “imperfect” machines will bring? The possibilities are as vast and varied as the neural connections in our own minds.